Caching data is vital for high-performance web applications. However, cache retrieval code can get messy and hard to test without the proper abstractions. In this article, we’ll start with an ugly multilevel cache and progressively refine it into something maintainable and testable.

The source code for this article is available at the Gigi Labs Bitbucket repository.

Naïve Multilevel Cache Retrieval

A multilevel cache is just a collection of separate caches, ordered by speed. We typically try to retrieve data from the fastest cache first; failing that, we try the second fastest, and so on.

For the example in this article we’ll use a simple two-level cache where:

- The first-level cache is a MemoryCache;

- The second-level cache is Redis.

We’re going to build a Web API method that retrieves a list of supported languages. We’ll prepare this data in Redis (e.g. using the command SADD languages en mt) but leave the MemoryCache empty, so that retrieval has to fall back to Redis.

A simple implementation looks something like this:

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Runtime.Caching;
using System.Threading.Tasks;
using System.Web.Http;
using StackExchange.Redis;

public class LanguagesController : ApiController
{
    // GET api/languages
    public async Task<IEnumerable<string>> GetAsync()
    {
        // retrieve from MemoryCache
        var valueObj = MemoryCache.Default.Get("languages");
        if (valueObj != null)
            return valueObj as List<string>;

        // retrieve from Redis
        var conn = await ConnectionMultiplexer.ConnectAsync("localhost:6379");
        var db = conn.GetDatabase(0);
        var redisSet = await db.SetMembersAsync("languages");

        // a missing key comes back as an empty array rather than null
        if (redisSet == null || redisSet.Length == 0)
            return null;

        return redisSet.Select(item => item.ToString()).ToList();
    }
}
```

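Note: connecting to Redis on every request, as above, is not the recommended way to use StackExchange.Redis, which is designed for a single ConnectionMultiplexer to be created once and shared across the application. It is presented this way for the sake of simplicity.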
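A minimal sketch of the share-one-connection idea using Lazy<T>. It assumes a hypothetical FakeConnection class standing in for ConnectionMultiplexer, so that it runs without a Redis server; the class only counts how many connections were opened.

```csharp
using System;
using System.Threading;

// Hypothetical stand-in for StackExchange.Redis's ConnectionMultiplexer,
// so the sketch runs without a Redis server. It counts opened connections.
public class FakeConnection
{
    public static int ConnectCount;

    public FakeConnection(string configuration)
    {
        Configuration = configuration;
        Interlocked.Increment(ref ConnectCount);
    }

    public string Configuration { get; }
}

// One connection, created on first use and shared by every request afterwards.
public static class SharedRedisConnection
{
    // With the real client, the factory would be
    // () => ConnectionMultiplexer.Connect("localhost:6379")
    private static readonly Lazy<FakeConnection> lazyConnection =
        new Lazy<FakeConnection>(
            () => new FakeConnection("localhost:6379"),
            LazyThreadSafetyMode.ExecutionAndPublication);

    public static FakeConnection Connection => lazyConnection.Value;
}
```

Every access to SharedRedisConnection.Connection returns the same instance, and ExecutionAndPublication ensures the factory runs only once even if several requests arrive at the same time. With the real client in the factory, a repository would call GetDatabase(0) on the shared connection instead of opening a new one on every call.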

Data Access Repositories and Multilevel Cache Abstraction

The controller method in the previous section has to deal with cache fallback logic as well as data access logic, neither of which is really its job (see the Single Responsibility Principle). This results in bloated controllers, especially if we add more cache levels (e.g. falling back to a database as a third level).

To alleviate this, the first thing we should do is move data access logic into repositories (this is called the Repository pattern). So for MemoryCache we do this:

```csharp
public class MemoryCacheRepository : IMemoryCacheRepository
{
    public Task<List<string>> GetLanguagesAsync()
    {
        var valueObj = MemoryCache.Default.Get("languages");
        var value = valueObj as List<string>;

        // MemoryCache lookups are synchronous, so wrap the result in a completed task
        return Task.FromResult(value);
    }
}
```

…and for Redis we have this instead:

```csharp
public class RedisCacheRepository : IRedisCacheRepository
{
    public async Task<List<string>> GetLanguagesAsync()
    {
        var conn = await ConnectionMultiplexer.ConnectAsync("localhost:6379");
        var db = conn.GetDatabase(0);
        var redisSet = await db.SetMembersAsync("languages");

        // SMEMBERS on a missing key yields an empty array rather than null;
        // report it as a miss (null), the same way the MemoryCache repository does
        if (redisSet == null || redisSet.Length == 0)
            return null;

        return redisSet.Select(item => item.ToString()).ToList();
    }
}
```

Each repository implements its own interface, in preparation for dependency injection, which is one of our end goals (we’ll get to that later):

```csharp
public interface IMemoryCacheRepository
{
    Task<List<string>> GetLanguagesAsync();
}

public interface IRedisCacheRepository
{
    Task<List<string>> GetLanguagesAsync();
}
```

For this simple example, the two interfaces are identical apart from their names. If your cache levels will always hold the same data, you can take this article further and simplify things even more. However, I’m not assuming that this is true in general; you might not want to have a multilevel cache everywhere.
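As a rough illustration of that simplification, here is a sketch that assumes every level returns the same data. A single hypothetical ILanguagesSource interface replaces the per-cache interfaces, and the levels live in one ordered list. InMemoryLanguagesSource is a made-up stand-in for the real repositories.

```csharp
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical single interface shared by every cache level; only
// appropriate when all levels hold the same data.
public interface ILanguagesSource
{
    Task<List<string>> GetLanguagesAsync();
}

// Hypothetical in-memory stand-in for the MemoryCache and Redis repositories;
// a null list simulates a cache miss.
public class InMemoryLanguagesSource : ILanguagesSource
{
    private readonly List<string> languages;

    public InMemoryLanguagesSource(List<string> languages) => this.languages = languages;

    public Task<List<string>> GetLanguagesAsync() => Task.FromResult(languages);
}

// With one shared interface, the levels can be held in a single ordered list,
// so adding a level needs no new interface or constructor parameter.
public class OrderedLanguagesCache
{
    private readonly IReadOnlyList<ILanguagesSource> levels;

    public OrderedLanguagesCache(IReadOnlyList<ILanguagesSource> levels) => this.levels = levels;

    public async Task<List<string>> GetLanguagesAsync()
    {
        foreach (var level in levels)
        {
            // a level is only queried after every faster level has missed
            var languages = await level.GetLanguagesAsync();
            if (languages != null)
                return languages;
        }

        return null; // every level missed
    }
}
```

Usage would look like new OrderedLanguagesCache(new ILanguagesSource[] { memoryRepo, redisRepo }), assuming both repositories implemented ILanguagesSource. Because each level is awaited before the next one is asked, slower levels are only queried on a miss.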

Let’s also add a new class to abstract the fallback logic:

```csharp
public class MultiLevelCache
{
    public async Task<T> GetAsync<T>(params Task<T>[] tasks) where T : class
    {
        // await each cache level in order of speed; null means a cache miss
        foreach (var task in tasks)
        {
            var retrievedValue = await task;
            if (retrievedValue != null)
                return retrievedValue;
        }

        // every level missed
        return null;
    }
}
```

Basically, this allows us to pass in a number of tasks, each corresponding to a cache lookup. Whenever a cache lookup returns null, we know it’s a cache miss; since only reference types can be null, this is why we need the where T : class constraint. In that case we move on to the next cache level, until we finally run out of options and just return null to the calling code.
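To see the fallback without MemoryCache or Redis, the sketch below feeds MultiLevelCache a hypothetical CountingLevel stand-in that records its lookups. It also exposes a subtlety: a C# Task starts running when it is created, so every level’s lookup has already been issued by the time GetAsync awaits the first one. With the real repositories, that means Redis is contacted even when the MemoryCache hits.

```csharp
using System.Collections.Generic;
using System.Threading.Tasks;

// MultiLevelCache as defined above, copied so the sketch is self-contained.
public class MultiLevelCache
{
    public async Task<T> GetAsync<T>(params Task<T>[] tasks) where T : class
    {
        foreach (var task in tasks)
        {
            var retrievedValue = await task;
            if (retrievedValue != null)
                return retrievedValue;
        }
        return null;
    }
}

// Hypothetical in-memory stand-in for a cache level; null means "not cached".
// It counts lookups so we can see which levels were actually queried.
public class CountingLevel
{
    private readonly List<string> value;

    public CountingLevel(List<string> value) => this.value = value;

    public int Lookups { get; private set; }

    public Task<List<string>> GetLanguagesAsync()
    {
        Lookups++;
        return Task.FromResult(value);
    }
}

public static class MultiLevelCacheDemo
{
    // The first level hits, so its value is returned, but the second level
    // was already queried when its task was created as an argument.
    public static async Task<(List<string> Result, int SecondLevelLookups)> FirstLevelHitAsync()
    {
        var memory = new CountingLevel(new List<string> { "en" });
        var redis = new CountingLevel(new List<string> { "en", "mt" });
        var result = await new MultiLevelCache().GetAsync(
            memory.GetLanguagesAsync(), redis.GetLanguagesAsync());
        return (result, redis.Lookups);
    }
}
```

FirstLevelHitAsync() returns the first level’s single-item list together with a lookup count of 1 for the second level: the second result is discarded, but the lookup happened anyway.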

MultiLevelCache is async-only to encourage asynchronous retrieval where possible. Synchronous retrieval can use Task.FromResult() (as the MemoryCache retrieval shown earlier does) to conform to this signature.
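Because a Task is already running by the time it is passed in, the task-based version cannot skip slower levels. If they should only be queried on a miss, one option is to pass functions that start each lookup (Func<Task<T>>) instead. This is a swapped-in variant, not the approach used in the rest of this article. The hypothetical LazyMultiLevelCache below keeps the same async-only contract, and a synchronous level still fits by returning Task.FromResult(...) from its function.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical lazy variant of MultiLevelCache: each level is a function that
// starts its lookup, so it is only invoked after every faster level has missed.
public class LazyMultiLevelCache
{
    public async Task<T> GetAsync<T>(params Func<Task<T>>[] lookups) where T : class
    {
        foreach (var lookup in lookups)
        {
            var retrievedValue = await lookup();
            if (retrievedValue != null)
                return retrievedValue;
        }
        return null; // every level missed
    }
}

public static class LazyMultiLevelCacheDemo
{
    // The MemoryCache stand-in hits, so the Redis stand-in is never invoked.
    public static async Task<(List<string> Result, int RedisLookups)> FirstLevelHitAsync()
    {
        int redisLookups = 0;
        var result = await new LazyMultiLevelCache().GetAsync(
            () => Task.FromResult(new List<string> { "en" }),
            () =>
            {
                redisLookups++;
                return Task.FromResult(new List<string> { "en", "mt" });
            });
        return (result, redisLookups);
    }
}
```

At the call sites, this would mean passing lambdas such as () => memoryCacheRepository.GetLanguagesAsync() and () => redisCacheRepository.GetLanguagesAsync() instead of calling the methods directly.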

We can now refactor our controller method into something much simpler:

```csharp
public async Task<IEnumerable<string>> GetAsync()
{
    var memoryCacheRepository = new MemoryCacheRepository();
    var redisCacheRepository = new RedisCacheRepository();
    var cache = new MultiLevelCache();

    var languages = await cache.GetAsync(
        memoryCacheRepository.GetLanguagesAsync(),
        redisCacheRepository.GetLanguagesAsync()
    );

    return languages;
}
```

The variable declarations will go away once we introduce dependency injection.

Multilevel Cache Repository

The code looks a lot neater now, but it is still not testable. We’re still technically calling cache retrieval logic from the controller. A cache depends on external resources (e.g. databases) and also potentially on time (if expiry is used), and that’s not good for unit tests.

A cache is not very different from more tangible data sources (such as Redis or a database). It shares their job of retrieving data and their reliance on resources external to the application, which is what makes it a poor fit for unit tests. A multilevel cache is, in addition, an abstraction over the underlying data sources, and is thus itself a good candidate for the repository pattern.

We can now move all our cache retrieval logic into a new MultiLevelCacheRepository class:

```csharp
public interface IMultiLevelCacheRepository
{
    Task<List<string>> GetLanguagesAsync();
}

public class MultiLevelCacheRepository : IMultiLevelCacheRepository
{
    public async Task<List<string>> GetLanguagesAsync()
    {
        var memoryCacheRepository = new MemoryCacheRepository();
        var redisCacheRepository = new RedisCacheRepository();
        var cache = new MultiLevelCache();

        var languages = await cache.GetAsync(
            memoryCacheRepository.GetLanguagesAsync(),
            redisCacheRepository.GetLanguagesAsync()
        );

        return languages;
    }
}
```

Our controller now only needs to talk to this repository and carry out any necessary logic after retrieval (in this case there is none):

```csharp
public async Task<IEnumerable<string>> GetAsync()
{
    var repo = new MultiLevelCacheRepository();
    var languages = await repo.GetLanguagesAsync();
    return languages;
}
```

Dependency Injection

Our end goal is to be able to write unit tests for our controller methods. A prerequisite for that is to introduce dependency injection.

Follow the instructions in “ASP .NET Web API Dependency Injection with Ninject” to set up Ninject, or use any other dependency injection framework you prefer.

In your dependency injection configuration class (NinjectWebCommon if you’re using Ninject), set up the classes and interfaces you need:

```csharp
private static void RegisterServices(IKernel kernel)
{
    kernel.Bind<IMemoryCacheRepository>().To<MemoryCacheRepository>()
        .InSingletonScope();
    kernel.Bind<IRedisCacheRepository>().To<RedisCacheRepository>()
        .InSingletonScope();
    kernel.Bind<IMultiLevelCacheRepository>().To<MultiLevelCacheRepository>()
        .InSingletonScope();
    kernel.Bind<MultiLevelCache>().ToSelf()
        .InSingletonScope();
}
```

Note: you can also set up an interface for MultiLevelCache if you want. I didn’t do that out of pure laziness.
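If you do want that interface, a sketch could look like the following. IMultiLevelCache and AlwaysMissCache are hypothetical names; the latter is a test double that treats every lookup as a miss.

```csharp
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical interface for MultiLevelCache, so it can be bound in the
// container and swapped for a fake in tests.
public interface IMultiLevelCache
{
    Task<T> GetAsync<T>(params Task<T>[] tasks) where T : class;
}

public class MultiLevelCache : IMultiLevelCache
{
    public async Task<T> GetAsync<T>(params Task<T>[] tasks) where T : class
    {
        foreach (var task in tasks)
        {
            var retrievedValue = await task;
            if (retrievedValue != null)
                return retrievedValue;
        }
        return null;
    }
}

// Hypothetical test double: ignores the levels and always reports a miss.
public class AlwaysMissCache : IMultiLevelCache
{
    public Task<T> GetAsync<T>(params Task<T>[] tasks) where T : class
        => Task.FromResult<T>(null);
}
```

The binding would then become kernel.Bind<IMultiLevelCache>().To<MultiLevelCache>().InSingletonScope(), and MultiLevelCacheRepository would take an IMultiLevelCache constructor parameter instead of the concrete class.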

Next, refactor MultiLevelCacheRepository to get the classes it needs via constructor injection:

```csharp
public class MultiLevelCacheRepository : IMultiLevelCacheRepository
{
    private readonly IMemoryCacheRepository memoryCacheRepository;
    private readonly IRedisCacheRepository redisCacheRepository;
    private readonly MultiLevelCache cache;

    public MultiLevelCacheRepository(
        IMemoryCacheRepository memoryCacheRepository,
        IRedisCacheRepository redisCacheRepository,
        MultiLevelCache cache)
    {
        this.memoryCacheRepository = memoryCacheRepository;
        this.redisCacheRepository = redisCacheRepository;
        this.cache = cache;
    }

    public async Task<List<string>> GetLanguagesAsync()
    {
        var languages = await cache.GetAsync(
            memoryCacheRepository.GetLanguagesAsync(),
            redisCacheRepository.GetLanguagesAsync()
        );

        return languages;
    }
}
```

Do the same with the controller:

```csharp
public class LanguagesController : ApiController
{
    private readonly IMultiLevelCacheRepository repo;

    public LanguagesController(IMultiLevelCacheRepository repo)
    {
        this.repo = repo;
    }

    // GET api/languages
    public async Task<IEnumerable<string>> GetAsync()
    {
        var languages = await repo.GetLanguagesAsync();
        return languages;
    }
}
```

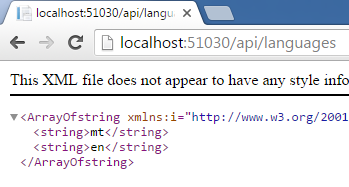

…and make sure it actually works:

Unit Test

Thanks to this design, we can now write unit tests. There is not much to test in this simple example, but we can write a simple (!) test to verify that, when the MemoryCache misses, the languages are retrieved from Redis and returned:

```csharp
[TestMethod]
public async Task GetLanguagesAsync_LanguagesAvailable_Returned()
{
    // arrange
    var languagesList = new List<string>() { "mt", "en" };

    var memCacheRepo = new Mock<MemoryCacheRepository>();
    var redisRepo = new Mock<RedisCacheRepository>();
    var cache = new MultiLevelCache();
    var multiLevelCacheRepo = new MultiLevelCacheRepository(
        memCacheRepo.Object, redisRepo.Object, cache);
    var controller = new LanguagesController(multiLevelCacheRepo);

    // MemoryCache misses (the cast picks the right ReturnsAsync overload for null)...
    memCacheRepo.Setup(repo => repo.GetLanguagesAsync())
        .ReturnsAsync((List<string>)null);

    // ...so the languages should come from Redis
    redisRepo.Setup(repo => repo.GetLanguagesAsync())
        .ReturnsAsync(languagesList);

    // act
    var languages = await controller.GetAsync();
    var actualLanguages = new List<string>(languages);

    // assert
    CollectionAssert.AreEqual(languagesList, actualLanguages);
}
```

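Here we’re using Moq’s Mock objects to help us set up the unit test. Since we are mocking the concrete repository classes, Moq can only intercept GetLanguagesAsync() if it is virtual, so we need to mark it as such in both data repositories:

```csharp
// MemoryCacheRepository
public virtual Task<List<string>> GetLanguagesAsync()

// RedisCacheRepository
public virtual async Task<List<string>> GetLanguagesAsync()
```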
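Because the repositories already sit behind interfaces, mocking IMemoryCacheRepository and IRedisCacheRepository instead of the concrete classes would avoid the virtual requirement. Hand-written fakes avoid it too, and also remove the dependency on a mocking library. Below is a self-contained sketch of the same test written with fakes. It assumes trimmed-down copies of the article’s classes (LanguagesController without the ApiController base, so it compiles without ASP.NET) and hypothetical FakeMemoryCacheRepository and FakeRedisCacheRepository classes.

```csharp
using System.Collections.Generic;
using System.Threading.Tasks;

// Trimmed-down copies of the article's types.
public interface IMemoryCacheRepository { Task<List<string>> GetLanguagesAsync(); }
public interface IRedisCacheRepository { Task<List<string>> GetLanguagesAsync(); }
public interface IMultiLevelCacheRepository { Task<List<string>> GetLanguagesAsync(); }

public class MultiLevelCache
{
    public async Task<T> GetAsync<T>(params Task<T>[] tasks) where T : class
    {
        foreach (var task in tasks)
        {
            var retrievedValue = await task;
            if (retrievedValue != null)
                return retrievedValue;
        }
        return null;
    }
}

public class MultiLevelCacheRepository : IMultiLevelCacheRepository
{
    private readonly IMemoryCacheRepository memoryCacheRepository;
    private readonly IRedisCacheRepository redisCacheRepository;
    private readonly MultiLevelCache cache;

    public MultiLevelCacheRepository(IMemoryCacheRepository memoryCacheRepository,
        IRedisCacheRepository redisCacheRepository, MultiLevelCache cache)
    {
        this.memoryCacheRepository = memoryCacheRepository;
        this.redisCacheRepository = redisCacheRepository;
        this.cache = cache;
    }

    public Task<List<string>> GetLanguagesAsync() => cache.GetAsync(
        memoryCacheRepository.GetLanguagesAsync(),
        redisCacheRepository.GetLanguagesAsync());
}

// LanguagesController without the ApiController base class.
public class LanguagesController
{
    private readonly IMultiLevelCacheRepository repo;

    public LanguagesController(IMultiLevelCacheRepository repo) => this.repo = repo;

    public async Task<IEnumerable<string>> GetAsync() => await repo.GetLanguagesAsync();
}

// Hypothetical hand-written fakes: they implement the interfaces directly,
// so no virtual methods or mocking library are needed.
public class FakeMemoryCacheRepository : IMemoryCacheRepository
{
    public List<string> Languages { get; set; } // null simulates a MemoryCache miss
    public Task<List<string>> GetLanguagesAsync() => Task.FromResult(Languages);
}

public class FakeRedisCacheRepository : IRedisCacheRepository
{
    public List<string> Languages { get; set; }
    public Task<List<string>> GetLanguagesAsync() => Task.FromResult(Languages);
}
```

Arranging the test then amounts to setting Languages = null on the memory fake and Languages = languagesList on the Redis fake, and asserting that the controller returns languagesList.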

Conclusion

Caching makes unit testing tricky. However, in this article we have seen how we can treat a cache just like any other repository and hide its retrieval implementation details in order to keep our code testable. We have also seen an abstraction for a multilevel cache, which makes cache fallback straightforward. Where cache levels are identical in terms of data, this approach can probably be simplified even further.